Earable SSI

Earable-based Silent Speech Interface

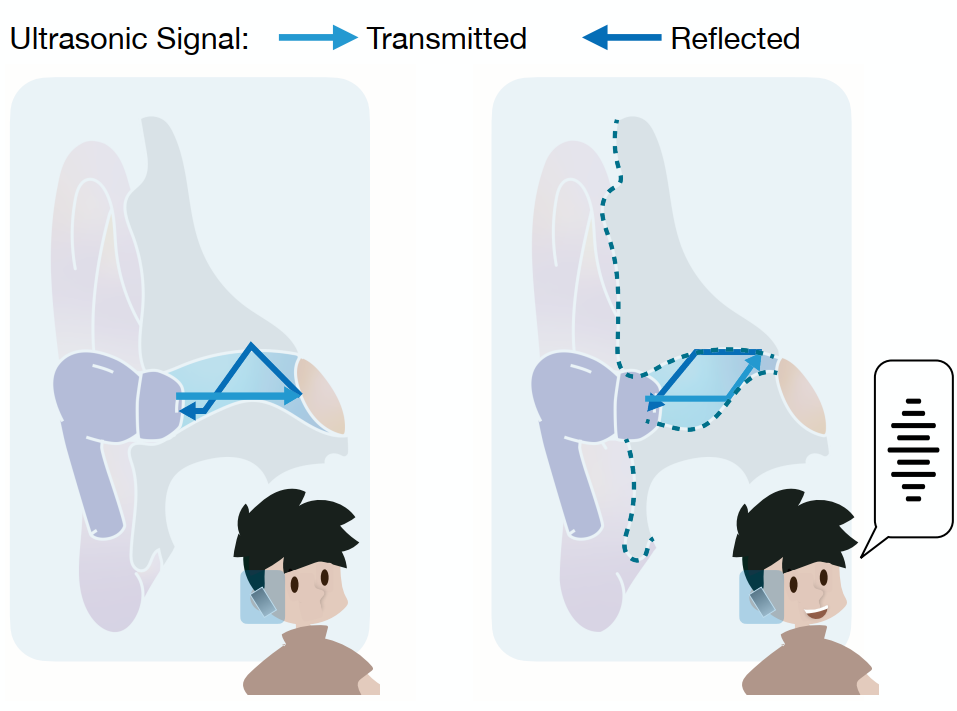

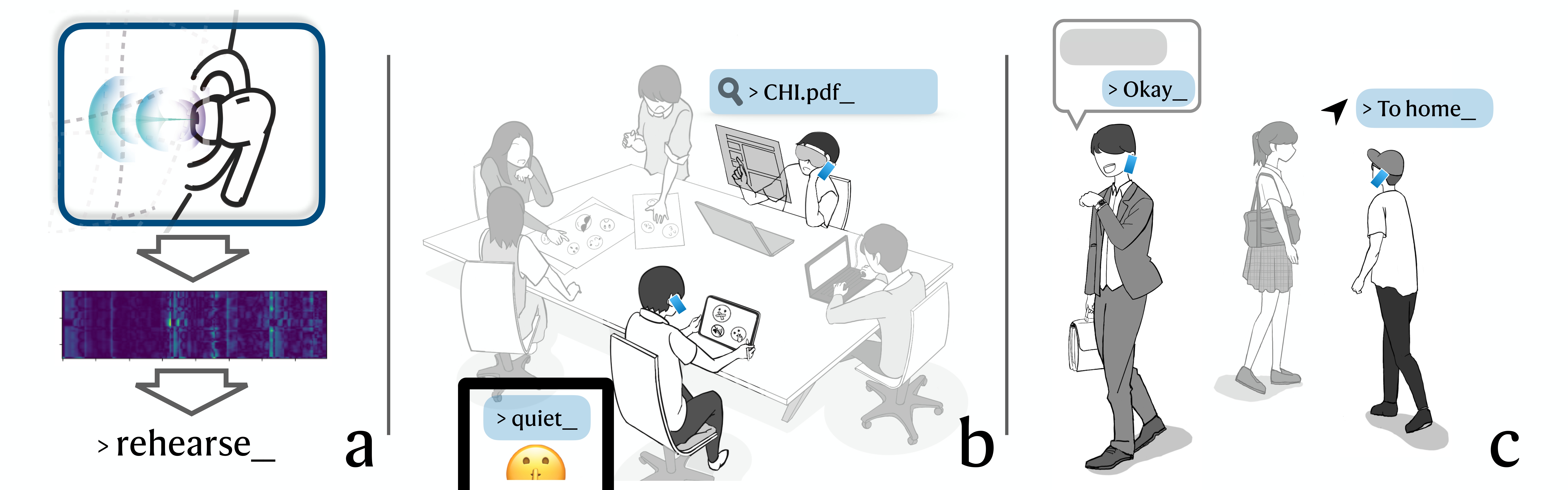

Silent speech interaction (SSI) lets users discreetly input text without using their hands. Existing wearable SSI systems typically require custom devices and support only a small lexicon, which restricts them to a handful of command words. This work proposes ReHEarSSE, an earbud-based ultrasonic SSI system that generalizes to words absent from its training dataset, supporting nearly an entire dictionary’s worth of words (Dong et al., 2024). As a user silently spells words, ReHEarSSE uses autoregressive features to identify subtle changes in ear canal shape. It infers words using a deep learning model trained to optimize connectionist temporal classification (CTC) loss, with an intermediate embedding that accounts for different letters and the transitions between them. We find that ReHEarSSE recognizes unseen words with an accuracy of 89.3 ± 10.9%.
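To illustrate how a CTC-trained model can turn per-frame letter scores into a spelled word, here is a minimal sketch of greedy CTC decoding. It is not ReHEarSSE's actual decoder: the function name, the blank symbol, the toy alphabet, and the scores are all hypothetical, and the source does not state which decoding strategy is used.

```python
from itertools import groupby

BLANK = "_"  # hypothetical symbol standing in for the CTC blank label


def ctc_greedy_decode(frame_scores, alphabet):
    """Greedy CTC decoding (illustrative assumption, not the paper's decoder).

    frame_scores: one list of scores per time frame, aligned with `alphabet`.
    alphabet: list of symbols; must contain BLANK.
    """
    # 1. Pick the highest-scoring symbol in every frame.
    best_path = [
        alphabet[max(range(len(scores)), key=scores.__getitem__)]
        for scores in frame_scores
    ]
    # 2. Collapse runs of the same symbol into a single symbol.
    collapsed = [symbol for symbol, _ in groupby(best_path)]
    # 3. Drop blanks, which only separate repeated letters.
    return "".join(symbol for symbol in collapsed if symbol != BLANK)


# Toy example: six frames of made-up scores for the spelled word "ear".
alphabet = [BLANK, "a", "e", "r"]
frames = [
    [0.10, 0.10, 0.70, 0.10],  # e
    [0.20, 0.10, 0.60, 0.10],  # e (repeat, collapsed)
    [0.80, 0.10, 0.05, 0.05],  # blank
    [0.10, 0.70, 0.10, 0.10],  # a
    [0.10, 0.10, 0.10, 0.70],  # r
    [0.10, 0.10, 0.10, 0.70],  # r (repeat, collapsed)
]
decoded = ctc_greedy_decode(frames, alphabet)
print(decoded)
```

The blank label is what lets a CTC output represent doubled letters: two runs of the same letter separated by a blank survive the collapse step as two letters.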

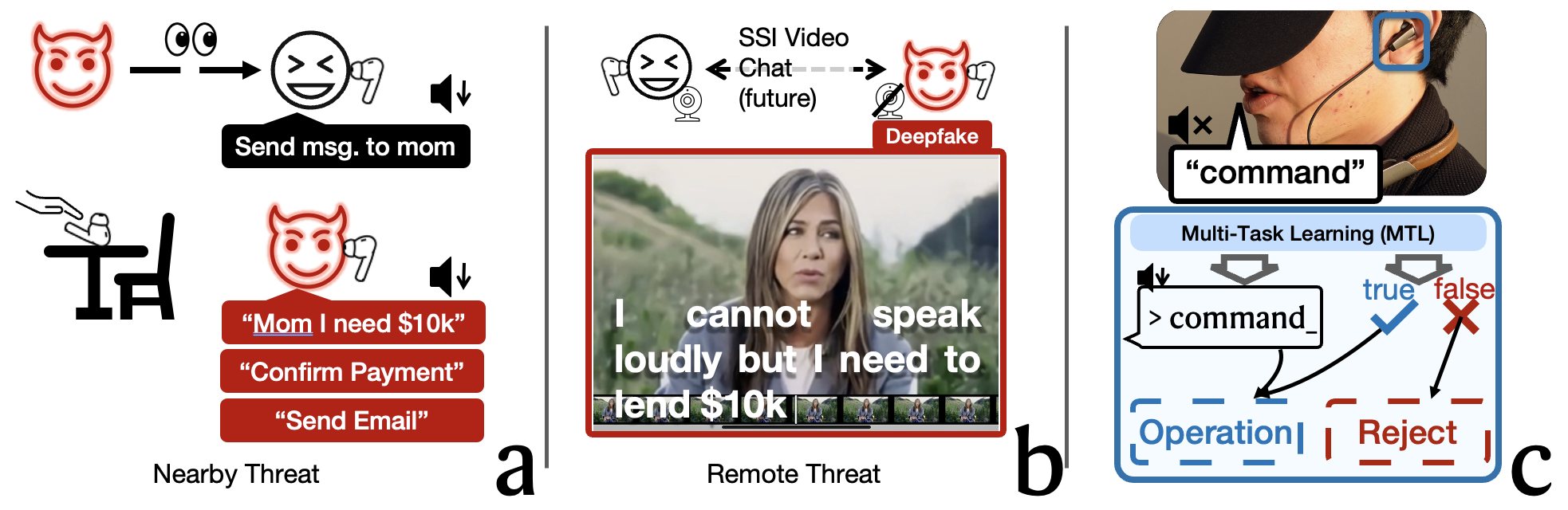

While users tend to prefer SSI over traditional speech recognition in public contexts, prior SSI work has rarely considered security. Conventional voice-based authentication mitigates these risks by verifying the speaker’s identity before granting access, but it is vulnerable to replay and injection attacks (e.g., triggering Siri via a loudspeaker), which leaves a pressing need for a reliable SSI system. Our analysis shows that silent speech recognition and speaker authentication are correlated rather than independent tasks. Each individual’s ear canal has an inherently unique structure that creates distinct acoustic propagation paths, so subtle ear canal deformations encode both the utterance content and the speaker’s identity.

In this work, we enable reliable SSI by proposing HEar-ID (Dong et al., 2025), which uses only a commodity active noise-canceling earbud to emit an inaudible OFDM signal and record both ultrasonic reflections and whisper audio. A single machine learning model then supports both silent spelling input (e.g., /i: eI Ar/ for the word “ear”) and user verification. In preliminary experiments with 11 participants, HEar-ID reliably rejected impostors with a false positive rate (FPR) of 3.2% and a true positive rate (TPR), accompanying 90.25% Top-1 word recognition accuracy for eight of them.
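As a rough illustration of what an inaudible OFDM probe signal looks like, the sketch below builds one symbol as a sum of orthogonal near-ultrasonic subcarriers. Every parameter here (48 kHz sampling rate, 10 ms symbol, 18 to 21 kHz band, zero phases) and both function names are assumptions chosen for the example; the source does not give HEar-ID's actual signal design.

```python
import cmath
import math

FS = 48_000                   # assumed sampling rate in Hz
N = 480                       # assumed symbol length in samples (10 ms)
SPACING = FS / N              # 100 Hz spacing makes subcarriers orthogonal
F_LO, F_HI = 18_000, 21_000   # assumed near-inaudible band in Hz


def ofdm_symbol():
    """Return one real-valued OFDM symbol as a list of N samples."""
    bins = range(round(F_LO / SPACING), round(F_HI / SPACING) + 1)
    scale = 1.0 / len(bins)   # normalize so the peak amplitude is at most 1
    return [
        scale * sum(math.cos(2 * math.pi * k * n / N) for k in bins)
        for n in range(N)
    ]


def tone_magnitude(signal, freq_hz):
    """Magnitude of the signal's projection onto a single frequency."""
    acc = sum(
        x * cmath.exp(-2j * math.pi * freq_hz * n / FS)
        for n, x in enumerate(signal)
    )
    return abs(acc) / len(signal)


symbol = ofdm_symbol()
in_band = tone_magnitude(symbol, 19_000)   # one of the emitted subcarriers
audible = tone_magnitude(symbol, 1_000)    # well inside the audible range
print(in_band > 100 * audible)
```

Because each subcarrier completes a whole number of cycles within the symbol, the energy stays confined to the chosen band and essentially none leaks to audible frequencies such as 1 kHz. A practical design would also need to handle peak-to-average power and symbol-boundary transients, which this sketch ignores.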

References

2025

- UbiComp’25 🏆 Poster: Recognizing Hidden-in-the-Ear Private Key for Reliable Silent Speech Interface Using Multi-Task Learning. In UbiComp Companion ’25, Oct 2025. Best Poster Award.